Point clouds

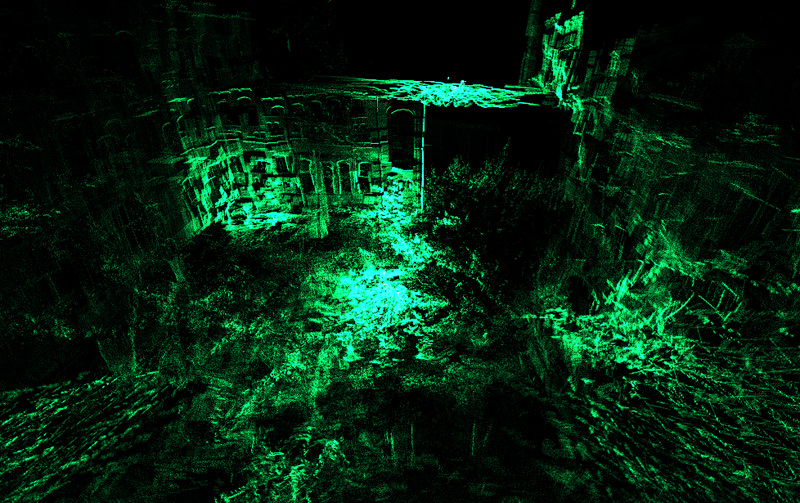

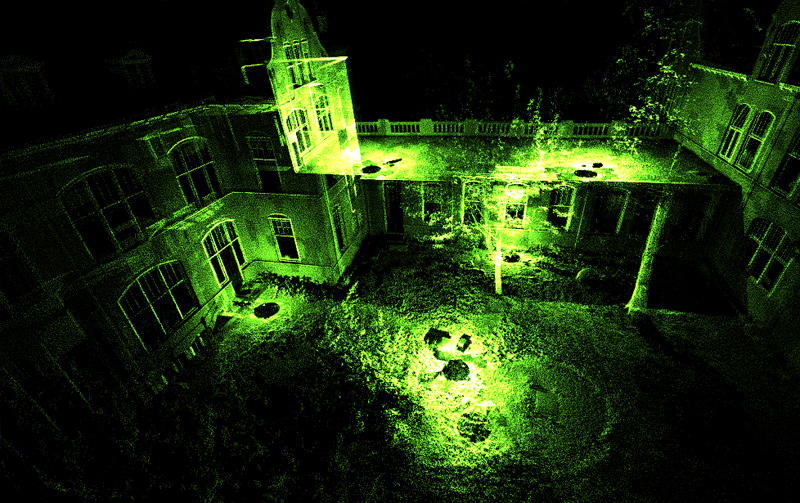

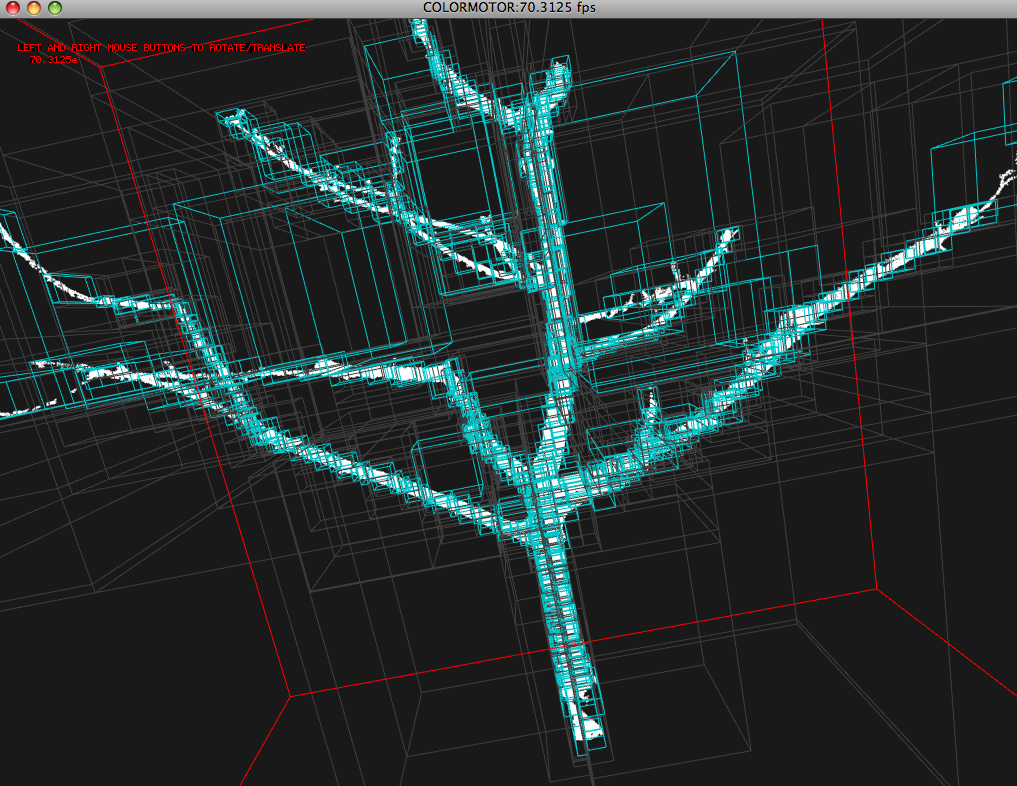

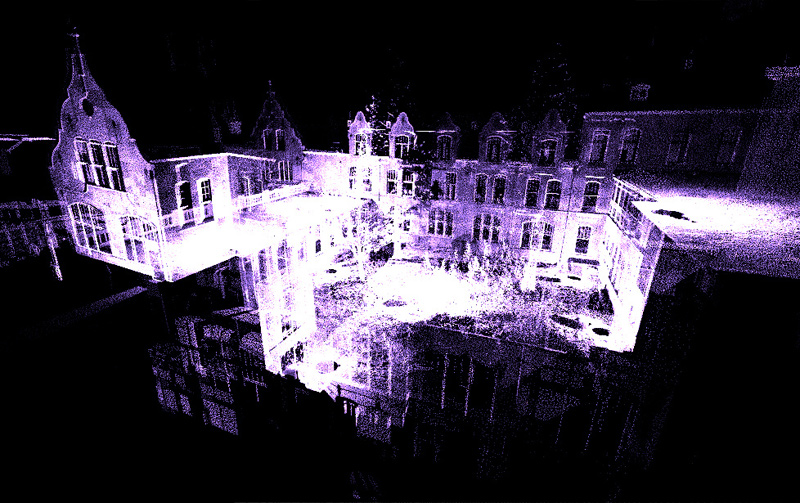

In this project, I developed an audio-reactive renderer for large point-cloud datasets produced by the 3D geoinformation department at TU Delft. The project started as a commission for Max Hattler, who performed live at the Discovery 2009 festival in Amsterdam, and then continued in collaboration with Alexander Bucksch.

The main challenge was to render point clouds consisting of billions of points at different levels of detail while still allowing the output to be modified in real time. The software used for the live performance at Discovery was implemented as an external plug-in for Apple's Quartz Composer.
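The page does not say how the levels of detail were built. Purely as an illustration, here is a sketch of one common approach, voxel-grid downsampling. It keeps one point per cubic voxel, halves the voxel edge at each finer level, and picks a level from the viewing distance. The function names, the halving scheme, and the `near` threshold are all hypothetical assumptions, not the method used in the original Quartz Composer plug-in.

```python
import math
from typing import Dict, List, Tuple

Point = Tuple[float, float, float]


def voxel_downsample(points: List[Point], voxel_size: float) -> List[Point]:
    """Keep the first point that falls into each cubic voxel of edge voxel_size."""
    kept: Dict[Tuple[int, int, int], Point] = {}
    for p in points:
        key = (math.floor(p[0] / voxel_size),
               math.floor(p[1] / voxel_size),
               math.floor(p[2] / voxel_size))
        # setdefault stores only the first point seen in each voxel
        kept.setdefault(key, p)
    return list(kept.values())


def build_lod_levels(points: List[Point], finest_voxel: float,
                     num_levels: int) -> List[List[Point]]:
    """Hypothetical LOD pyramid: level 0 is coarsest, and each finer
    level halves the voxel edge until it reaches finest_voxel."""
    levels = []
    for level in range(num_levels):
        size = finest_voxel * 2 ** (num_levels - 1 - level)
        levels.append(voxel_downsample(points, size))
    return levels


def select_level(distance: float, num_levels: int, near: float = 10.0) -> int:
    """Assumed heuristic: use the finest level within `near`, then drop
    one level of detail for each doubling of distance beyond it."""
    if distance <= near:
        return num_levels - 1
    drop = int(math.log2(distance / near))  # floor, since the ratio is > 1
    return max(0, num_levels - 1 - drop)
```

For a 10×10×10 grid of unit-spaced points, `build_lod_levels(points, 1.0, 3)` yields levels of 27, 125 and 1000 points. A renderer working at the scale of billions of points would precompute such levels offline and stream them to the GPU rather than build them in memory like this.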

As part of the project, we also used a SwissRanger time-of-flight camera, a precursor of the far more affordable depth cameras, such as the Kinect, that have since become common.